Microkernels and the Future of Operating Systems

August 2006

Operating systems have become the veritable backbone of corporate, educational, and home settings. Most daily computing is based upon operating system capabilities and standards. Secure, reliable, and scalable systems are necessary for future growth, maintenance, and provision of services, and operating systems must rise to the challenge. One powerful approach is the microkernel, a highly modular, operating-system-neutral abstraction upon which operating system servers can be built. Microkernels promise to provide security, reliability, scalability, and portability to a degree that is impossible with traditional monolithic operating systems. These advantages are essential for future growth, and the industry must move in their direction. However, issues with the performance and acceptance of microkernels must be overcome.

Introduction

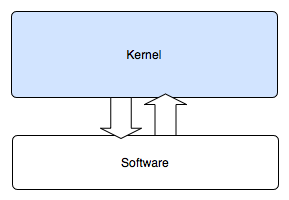

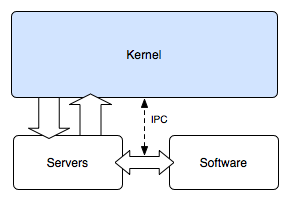

The kernel is the core part of an operating system (OS), responsible for managing the system's resources and the communication between hardware and software [7]. Kernels are not strictly required, since programs can run directly on hardware, but their abstractions greatly simplify programming [2]. Code running in user space does not need intimate knowledge of the hardware; operating system calls handle communication with the hardware. In addition, the critical need for time-sharing and resource management in a multiprogramming or multi-user environment calls for highly privileged mediation between processes, a task typically performed by the kernel. Monolithic kernels, such as UNIX, Linux, the Windows 9x series, VMS, and Mac OS before its recent versions, have dominated the OS landscape [12]. They are defined by the fact that all operating system services, such as device drivers, virtual memory, file systems, networking, and CPU multiplexing, run in the kernel [2]. Microkernels delegate many of these services to separate programs called servers (see Figure 2). This distribution of tasks is the microkernel's primary advantage, and it is from this distinction that microkernels derive both their strengths and their flaws. Other designs, such as exokernels, take this idea one step further by moving all operating system functions into user-space programs [7]. As with microkernels, this extension has its own weaknesses.

Figure 1: Monolithic Design [12]

Figure 2: Microkernel Design [10]
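As a rough illustration of the distinction, the toy Python model below contrasts the two designs. It is a sketch, not code from any real kernel: all class names and the `"fs"` service name are hypothetical. In the monolithic model a file read is a plain function call into kernel code; in the microkernel model the kernel only routes a message to a separate file server, a step that in a real system would cross address spaces.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """A request passed to a server (hypothetical format)."""
    service: str
    op: str
    arg: str


class MonolithicKernel:
    """All services live inside the kernel and are called directly."""

    def __init__(self) -> None:
        self.files = {"/etc/motd": "hello"}

    def read(self, path: str) -> str:
        # The file system is just more kernel code: a plain function call.
        return self.files[path]


class FileServer:
    """A file system implemented as a separate server."""

    def __init__(self) -> None:
        self.files = {"/etc/motd": "hello"}

    def handle(self, msg: Message) -> str:
        if msg.op == "read":
            return self.files[msg.arg]
        raise ValueError(f"unsupported operation {msg.op!r}")


class Microkernel:
    """The kernel only routes messages; servers do the real work."""

    def __init__(self) -> None:
        self.servers = {}

    def register(self, name: str, server: FileServer) -> None:
        self.servers[name] = server

    def send(self, msg: Message) -> str:
        # In a real microkernel this hop is an IPC across address spaces.
        return self.servers[msg.service].handle(msg)


mono = MonolithicKernel()
micro = Microkernel()
micro.register("fs", FileServer())
print(mono.read("/etc/motd"))                          # hello
print(micro.send(Message("fs", "read", "/etc/motd")))  # hello
```

Both paths return the same data; the difference is that in the microkernel model only the routing in `Microkernel.send` is kernel code, while the file system logic lives in a separate server.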

Security

One of the most pressing challenges of the digital financial age is security. As valuable resources are increasingly electronic, the need for safe, protected environments continues to grow. Microkernels offer several ways to improve OS security: device driver isolation, a lower bug count, protection of core services, and better enforcement of security policy.

Since kernel code is, by definition, the most highly privileged code in the system, minimizing the amount of code that runs in kernel mode limits the damage that errors or malicious code can cause. Fault isolation is difficult in monolithic systems because the operating system provides no isolation between its components [13]. In fact,

a modern operating system contains hundreds or thousands of procedures linked together as a single binary program running in kernel mode. Every single one of the millions of lines of kernel code can overwrite key data structures that an unrelated component uses, crashing the system in ways difficult to detect. In addition, if a virus or worm infects one kernel procedure, there is no way to keep it from rapidly spreading to others and taking control of the entire machine [13].

Thus, there are many reasons to run as little code in kernel mode as possible. One primary area of concern is device drivers. Because of the huge variety of devices, drivers are typically of third-party origin and will likely remain so. Writing drivers for every device on every operating system is so large a task that only a distributed effort is feasible. Yet third-party code is frequently undisciplined or untested, as there are no mandatory standards that must be met to post code on the Internet or to make drivers available for download. Installing such drivers introduces untested or lower-quality code into the system, with error rates often three to seven times higher than those of ordinary code [13]. In addition, the possibility of malicious code hijacking the system is much greater when large amounts of highly privileged code are available to be compromised. Microkernels seek to limit both the damage caused by bugs and the scope of potentially malicious code by keeping device drivers in user space.
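A minimal sketch of why user-space drivers contain faults, assuming ordinary OS processes as a stand-in for user-space driver processes. The two "drivers" are hypothetical snippets of Python source; the buggy one performs an out-of-bounds access, which in Python raises `IndexError` rather than silently corrupting memory as it might in C. Because each runs in its own process, the failure ends only that process.

```python
import subprocess
import sys

# Hypothetical "drivers": tiny Python programs, each run in its own process,
# standing in for user-space drivers with private address spaces.
GOOD_DRIVER = "print('disk: read 512 bytes')"
BUGGY_DRIVER = "buffer = [0] * 4\nprint(buffer[10])"  # out-of-bounds bug


def run_driver(name: str, source: str) -> bool:
    """Run one driver in a child process; return True if it exited cleanly.

    An error kills only the child process; this (parent) process carries on.
    """
    result = subprocess.run(
        [sys.executable, "-c", source],
        capture_output=True,
        text=True,
        timeout=30,
    )
    if result.returncode != 0:
        print(f"{name}: crashed (exit status {result.returncode})")
        return False
    print(f"{name}: {result.stdout.strip()}")
    return True


run_driver("disk", GOOD_DRIVER)  # disk: read 512 bytes
run_driver("net", BUGGY_DRIVER)  # net: crashed (exit status 1)
print("system: still running")
```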

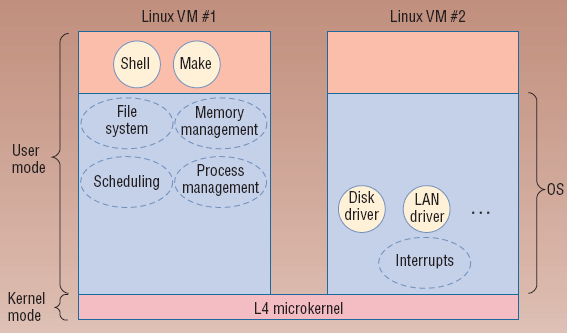

Device drivers can be contained using a variety of techniques. In the paravirtual machine model, a research group at the University of Karlsruhe built the L4 microkernel and ran multiple copies of a specially modified version of Linux on top of it [13]. One virtual machine ran the application programs, while other virtual machines ran the device drivers. When a device driver virtual machine crashes, only that virtual machine goes down, not the entire system. This isolation costs about 3 to 8 percent in performance [13]. The probability of a device driver error is unchanged, but the consequences of failure are not: faults are confined to a single virtual machine, which can often be reloaded easily, rather than affecting the main system. Thus, device drivers may crash and be temporarily unavailable, but the rest of the system can continue productively. The main user workspace is not damaged, and the most important data generally remains undisturbed. Even a malicious takeover is limited in scope, since it is restricted to a single, non-critical virtual machine.

Figure 3: Paravirtual Model [13]

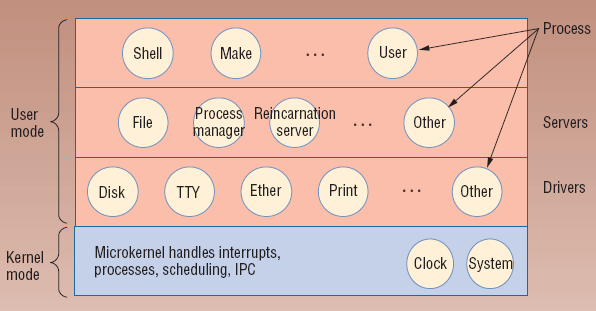

Another technique, the multiserver architecture used in Minix 3, runs device drivers in a separate layer above the microkernel. Figure 4 shows this layered approach. Application processes run in the highest layer and make system calls to servers, rather than to the kernel as in the monolithic model. These servers handle the file system, process management, and other typical operating system services. Device drivers sit one layer down; each runs as a separate user-mode process with its own private address space, enforced by the memory management unit (MMU). This applies to drivers for cameras, disks, video, audio, printing, and Ethernet alike. Drivers must make kernel calls to complete privileged operations, which ensures that the verified kernel, not third-party drivers, makes all of the critical changes. The reincarnation server kills stalled or crashed processes and drivers and restarts them, so individual drivers can fail without a system-wide crash and the system recovers smoothly. Because all of these processes and drivers run in non-privileged user space, the damage they can do is severely limited.

Figure 4: Multiserver Architecture [13]
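The reincarnation server's job can be sketched as a heartbeat-and-restart loop. This in-process Python model is hypothetical and much simpler than Minix 3: `Driver`, `ReincarnationServer`, `ping`, and `check_all` are illustrative names, a missed heartbeat stands in for both the stalled and the crashed case, and a real implementation would kill and respawn actual processes.

```python
from typing import Callable, Dict


class Driver:
    """Toy driver; setting `crashed` simulates a fault (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.crashed = False

    def ping(self) -> bool:
        # A healthy driver answers the heartbeat; a dead or hung one does not.
        return not self.crashed


class ReincarnationServer:
    """Pings every driver and replaces any that fail to answer."""

    def __init__(self) -> None:
        self.factories: Dict[str, Callable[[], Driver]] = {}
        self.drivers: Dict[str, Driver] = {}
        self.restarts: Dict[str, int] = {}

    def start(self, name: str, factory: Callable[[], Driver]) -> None:
        self.factories[name] = factory
        self.drivers[name] = factory()
        self.restarts[name] = 0

    def check_all(self) -> None:
        for name, driver in list(self.drivers.items()):
            if not driver.ping():
                # Discard the failed instance and start a fresh one.
                self.drivers[name] = self.factories[name]()
                self.restarts[name] += 1


rs = ReincarnationServer()
rs.start("ethernet", lambda: Driver("ethernet"))
rs.start("disk", lambda: Driver("disk"))
rs.drivers["ethernet"].crashed = True  # simulate a driver fault
rs.check_all()
print(rs.restarts)  # {'ethernet': 1, 'disk': 0}
```

Only the faulty driver is replaced; the disk driver and the supervisor itself keep running throughout.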

Another security benefit comes from minimizing bugs in the kernel code itself. When Linux was first released on 17 September 1991, it contained 8,196 lines of code. That figure has since exploded: the 15 August 2005 release contained 4,686,186 lines [8]. Every line adds complexity and opportunity for error, and makes verification and understanding more difficult [13]. Several studies have estimated the typical bug density at six bugs per 1,000 lines of code [13]. By contrast, later versions of the Mach microkernel contain 44,000 lines [9]. Kernels in the highly successful L4 family have an image size of only 40 to 200 kB, depending on the implementation [1], compared to 189 MB for the 15 August 2005 Linux release [8]. While many microkernels are not tiny, they are still significantly smaller than their monolithic counterparts. Because far less code is necessary, the probability of kernel bugs is considerably reduced. As with device drivers, bugs in application or server code can do far less damage, and pose far less security risk, than bugs in the kernel.
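Applying the cited estimate of six bugs per 1,000 lines to the line counts above gives a rough sense of scale. This back-of-the-envelope calculation assumes the same uniform bug density for both code bases, which real code will not match exactly.

```python
BUGS_PER_KLOC = 6  # bugs per 1,000 lines, the estimate cited from [13]


def expected_bugs(lines_of_code: int, bugs_per_kloc: float = BUGS_PER_KLOC) -> float:
    """Expected bug count, assuming a uniform bug density."""
    return lines_of_code / 1000 * bugs_per_kloc


linux_2005 = expected_bugs(4_686_186)  # Linux, 15 August 2005 release [8]
mach = expected_bugs(44_000)           # later Mach versions [9]
print(round(linux_2005), round(mach))  # 28117 264
```

Under that assumption, the 2005 Linux release would carry roughly 28,000 latent bugs against about 260 for Mach, roughly a hundredfold difference.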

Beyond reducing bugs and removing third-party code from the kernel, microkernels also protect core services from interfering with each other by running them in individual protected address spaces instead of in kernel space [13]. The kernel proper can then force these services to follow security policies. In monolithic designs, all kernel code has free rein to modify any other part of the kernel. Once these components are isolated and managed, they no longer have the unrestricted access to each other that they had before. Thus, microkernel design has great potential to achieve the higher grade of security that computing requires today.
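One way to picture kernel-enforced policy is a default-deny check on every message. The sketch below is hypothetical (the `PolicyKernel` class and its whitelist of sender/receiver pairs are assumptions, not a scheme from the cited sources): an application may talk to the file server, and the file server to the disk driver, but an application that tries to bypass the file server and write to the disk directly is refused by the kernel.

```python
class PolicyKernel:
    """Toy kernel that checks an IPC policy before delivering a message.

    `allowed` holds the (sender, receiver) pairs that may communicate;
    anything not listed is denied by default (hypothetical scheme).
    """

    def __init__(self, allowed: set) -> None:
        self.allowed = allowed
        self.mailboxes = {}

    def send(self, sender: str, receiver: str, payload: str) -> None:
        if (sender, receiver) not in self.allowed:
            raise PermissionError(f"{sender} may not send to {receiver}")
        self.mailboxes.setdefault(receiver, []).append(payload)


kernel = PolicyKernel({("app", "fs"), ("fs", "disk")})
kernel.send("app", "fs", "open /etc/motd")        # permitted by policy
try:
    kernel.send("app", "disk", "write sector 0")  # tries to bypass the fs
except PermissionError as err:
    print(err)  # app may not send to disk
```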

Reliability

Besides security, reliability often has the greatest impact on a system, and it is certainly the aspect users notice most. Microkernel designs seek to improve reliability by capitalizing on the same isolation that aids security. As mentioned before, a smaller code base is easier to verify and understand, which prevents bugs that are not only insecure but often destabilize the system. Because servers, not just applications, receive separate address spaces, the protection that traditional operating systems brought to applications can now largely be applied to operating system servers as well. Advanced techniques also allow additions to be made to the kernel without restarting, and even allow individual modules to recover without affecting the entire system. Many have touted the idea that "since practically all of the functions of the OS run in user space, everything can be changed on the fly" [3]. Just as applications in a conventional OS are started, terminated, or modified, server processes can now be treated the same way. A problematic service can be restarted instead of crashing the OS [2]. Abilities such as these can significantly reduce downtime and the need to shut down the entire system. This is a key feature of the microkernels examined in [4], such as L4 and SPIN. Kernel extensions allow "even on-line modification of the kernel" [4]. Because modules can be updated and restarted without a system restart, system uptime also increases. Faulty modules that previously required a maintenance period in a traditional OS can now be patched or replaced almost invisibly. Eliminating the need for a maintenance window makes patching more flexible. Patches can be applied at the module level almost immediately, and those affecting the kernel will be far fewer, because the kernel's responsibilities are small and its code is thoroughly tested.
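Replacing a server on the fly can be sketched with a name server that maps service names to handlers. The design below is an illustrative assumption, not the mechanism used by L4 or SPIN: clients call services by name, so publishing a new handler under the same name swaps the implementation without restarting anything else. A real system would also need to drain in-flight requests and transfer the server's state.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


class NameServer:
    """Maps service names to handlers so a server can be replaced while
    clients keep calling it by name (hypothetical design)."""

    def __init__(self) -> None:
        self._services: Dict[str, Handler] = {}

    def publish(self, name: str, handler: Handler) -> None:
        # Publishing under an existing name swaps in the new version.
        self._services[name] = handler

    def call(self, name: str, request: str) -> str:
        return self._services[name](request)


def echo_v1(request: str) -> str:
    return request  # original server


def echo_v2(request: str) -> str:
    return request.upper()  # patched server


ns = NameServer()
ns.publish("echo", echo_v1)
print(ns.call("echo", "ping"))  # ping
ns.publish("echo", echo_v2)     # live update; nothing else restarts
print(ns.call("echo", "ping"))  # PING
```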

Scalability

Many of the features that improve reliability also help scalability. Dynamic adjustments can be used for expansion. Modularizing key resources allows complexity to be distributed. The smaller kernel memory footprint leaves more room for services. Symmetric multiprocessing (SMP) is managed better, so more hardware can be integrated to meet the ever-increasing demand for computing resources. Dynamic configuration allows additional resources to be assimilated transparently. Future growth will depend upon systems' ability to expand.

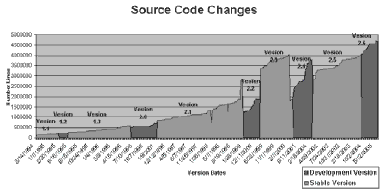

Distributing complexity is one of the key requirements for the future of technology production. As systems become increasingly complicated and the scale of computing grows, no single person, machine, or system can handle the responsibility. Even though hardware performance has doubled approximately every 18 months [6], the need for resources continues to rise. Microkernels modularize OS components. Instead of one tightly integrated piece of software requiring the close coordination of a massive team, each module can be developed independently as long as its requirements and conditions are met. As the complexity and number of tasks the OS must perform increase, new modules can be added piecemeal rather than requiring a complete rewrite. Much as object-oriented programming changed the face of software design, microkernels could change the way operating systems are developed. As Figure 5 shows, the amount of source code has been increasing exponentially, so code development must be distributed. The microkernel's expandability is a rich asset, and the traditional OS vendors are struggling to keep up with the current pace.

Figure 5: Linux Code Size [8]

Better SMP utilization is another benefit of the microkernel design. Multiprocessor performance depends on the degree of parallelism achieved. Because OS services do not run as one monolithic thread, multiple processors can be used for both application and OS services [2]. Bookkeeping overhead can be spread across individual servers, and processes can run independently rather than blocking other kernel services as in the single-threaded model. As grids, multiprocessors, and dual-core technology increase in popularity, microkernels are poised to take advantage of the extra processing power, since software can make fuller use of the parallelism available in SMP designs.
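The structural point, that each OS server runs its own loop and can be scheduled independently, is sketched below with Python threads and queues. The server names and the `None` shutdown sentinel are hypothetical. Note that CPython's global interpreter lock keeps these threads from executing Python code truly in parallel, so this illustrates the organization rather than an actual multiprocessor speedup.

```python
import queue
import threading


def server(name, inbox, outbox):
    """One OS server: its own loop, schedulable independently of the others."""
    while True:
        request = inbox.get()
        if request is None:  # sentinel: shut this server down
            break
        outbox.put((name, f"done: {request}"))


def run_servers(requests):
    """Dispatch (server, request) pairs to per-server threads; collect replies."""
    outbox = queue.Queue()
    inboxes = {name: queue.Queue() for name in ("fs", "net")}
    threads = [
        threading.Thread(target=server, args=(name, box, outbox))
        for name, box in inboxes.items()
    ]
    for t in threads:
        t.start()
    for target, request in requests:
        inboxes[target].put(request)
    for box in inboxes.values():
        box.put(None)
    for t in threads:
        t.join()
    replies = []
    while not outbox.empty():
        replies.append(outbox.get())
    return sorted(replies)


print(run_servers([("fs", "read a"), ("net", "send b"), ("fs", "read c")]))
# [('fs', 'done: read a'), ('fs', 'done: read c'), ('net', 'done: send b')]
```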

Dynamic configuration not only lengthens uptime, improving reliability, but also allows additional resources to be integrated. Browne says, "Services can be changed without need to restart the whole system" [2]. Initiatives such as loadable modules and dynamic reconfiguration in modern Linux kernels allow additional services or hardware to be added. Work is also being done on the dynamic adjustment of other scalability-relevant parameters [14]. Efforts to track processors, adjust lock primitives at runtime (including full deactivation of kernel locks), modify lock granularity, and vary resource usage should all make scaling simpler. Processor tracking ties into the SMP utilization discussed above. Adjusting kernel locks and their granularity strongly affects the ability to use more processors effectively. Kernels that can gradually change the granularity and permissiveness of their locks will be able to fine-tune themselves to increasing numbers of processors without restructuring. A scalable translation lookaside buffer (TLB) mechanism that reduces inter-processor interference during concurrent memory access will help systems use ever-increasing amounts of memory. All of these points are currently being researched by groups such as the L4Ka Project [14].
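Runtime-adjustable lock granularity can be sketched as a table whose locking mode is switched between no locking, one coarse lock, and per-bucket fine-grained locks. This is a hypothetical illustration of the idea, not the L4Ka design, and it assumes `set_granularity` is only called while no thread is inside `add`; switching safely under concurrent use is considerably harder.

```python
import threading
from contextlib import nullcontext


class AdaptiveTable:
    """Hypothetical kernel table whose lock granularity can change at runtime.

    Assumes set_granularity() is only called while no thread is inside add().
    """

    def __init__(self, n_buckets: int = 8) -> None:
        self.buckets = [0] * n_buckets
        self.set_granularity("coarse")

    def set_granularity(self, mode: str) -> None:
        if mode == "none":      # one CPU: locks can be switched off entirely
            self.locks = None
        elif mode == "coarse":  # a single lock guards every bucket
            shared = threading.Lock()
            self.locks = [shared] * len(self.buckets)
        elif mode == "fine":    # one lock per bucket: less contention
            self.locks = [threading.Lock() for _ in self.buckets]
        else:
            raise ValueError(f"unknown mode {mode!r}")
        self.mode = mode

    def add(self, key: int, amount: int = 1) -> None:
        i = key % len(self.buckets)
        guard = nullcontext() if self.locks is None else self.locks[i]
        with guard:
            self.buckets[i] += amount


def worker(table: AdaptiveTable, n: int) -> None:
    for key in range(n):
        table.add(key)


table = AdaptiveTable()
table.set_granularity("fine")  # many CPUs: switch to per-bucket locks
threads = [threading.Thread(target=worker, args=(table, 1000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(table.buckets))  # 4000
```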

Portability

The increasing diversity of systems makes portability a growing concern. Countless hardware devices exist, and diverse organizations have endlessly varied requirements. Legacy standards must also be considered, given the high cost of replacing every system. Microkernels such as Mach are "OS neutral" and can host various operating systems on top of them [2]. The L4 microkernel, for example, is often used to host Linux virtual machines [13]. The ability to use microkernels in a variety of circumstances should help them gain wider acceptance. A common microkernel base may simplify computing environments that run multiple operating systems. The ability to share hardware capabilities, much as virtual machines attempt to do, gives greater flexibility in what can be run on a particular machine.

Issues: Performance

Microkernels have many advantages; however, some issues have surfaced in the move to microkernel designs. The most criticized of these is performance. Microkernels often suffer an 8 to 10 percent performance penalty despite their streamlined modularity [2, 4, 12]. Most of this penalty comes from the additional context switches required when system services run in user mode rather than kernel mode. Moving remote procedure calls into user mode raises their cost from 40 microseconds per traditional call to 200 microseconds [4], a fivefold increase that translates directly into slowdown. Other factors, such as the higher cost of memory references due to interference between modules and the poorer locality of kernel code, also affect performance, but the inter-process communication (IPC) cost of remote calls is the greater of the two [4].
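A simple model shows how the per-call costs translate into overall slowdown. The 40 and 200 microsecond figures come from the text; the workload (2 seconds of computation plus 1,000 service calls) is hypothetical, and the model assumes the per-call cost is the only difference between the two systems.

```python
BASE_CALL_US = 40    # traditional in-kernel call, per [4]
MICRO_CALL_US = 200  # user-mode remote procedure call, per [4]


def slowdown(compute_s: float, calls: int) -> float:
    """Fractional slowdown of the microkernel run relative to the monolithic one.

    Assumes (hypothetically) that everything except the per-call cost takes
    `compute_s` seconds on both systems.
    """
    mono = compute_s + calls * BASE_CALL_US * 1e-6
    micro = compute_s + calls * MICRO_CALL_US * 1e-6
    return micro / mono - 1


# Hypothetical workload: 2 s of computation plus 1,000 service calls.
print(f"{slowdown(2.0, 1_000):.1%}")  # 7.8%
```

With these assumed numbers the slowdown is about 7.8 percent; a call-heavy workload would fare worse, and a compute-bound one better.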

Performance has been a major issue for microkernels and one heavily researched by the L4 designers. Passing short messages in registers, rather than using the "double copy" of copying a message into kernel space and then into the receiving module's space, improves performance. Large messages are likewise copied only once, instead of twice, when buffering is unnecessary. Lazy scheduling, which waits until scheduler queues are queried before moving threads between them, also increases IPC speed by up to 25 percent [4]. The IPC cost comes not only from the context switch but also from the larger number of messages: instead of a unified kernel, there are now many servers and a kernel all communicating with one another. Batch message passing and lazy scheduling help make message passing as efficient as possible, reducing the performance penalty. Improvements like these must be made for microkernels to gain wide acceptance, because moving to slower systems is an option many are not willing to take. Hardware gains also help make up for inefficiencies, as they have for monolithic kernels.
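The difference between the copying strategies can be sketched by counting bytes moved. This is a hypothetical model: address spaces are plain Python buffers, and `MAX_REGISTER_WORDS` is an assumed limit, not L4's actual register count.

```python
MAX_REGISTER_WORDS = 8  # hypothetical limit for register-only messages


def ipc_double_copy(src: bytes, dst: bytearray) -> int:
    """Sender -> kernel buffer -> receiver. Returns the bytes copied."""
    kernel_buffer = bytearray(src)             # copy 1: into kernel space
    dst[: len(kernel_buffer)] = kernel_buffer  # copy 2: out to the receiver
    return 2 * len(src)


def ipc_single_copy(src: bytes, dst: bytearray) -> int:
    """Copy directly between address spaces when no buffering is needed."""
    dst[: len(src)] = src
    return len(src)


def ipc_registers(words: tuple) -> tuple:
    """Short message carried in CPU registers: no memory buffer is copied."""
    if len(words) > MAX_REGISTER_WORDS:
        raise ValueError("too long for registers; fall back to a memory copy")
    return words  # the receiver reads the same "registers"


message = b"x" * 4096
receiver = bytearray(4096)
print(ipc_double_copy(message, receiver))  # 8192
print(ipc_single_copy(message, receiver))  # 4096
print(ipc_registers((1, 2, 3)))            # (1, 2, 3)
```

For a 4 KB message, dropping the intermediate kernel buffer halves the bytes moved; for a message of a few words, register passing avoids memory copies altogether.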

Issues: Acceptance

Another obstacle microkernels must overcome is the lack of a dominant, compatible OS. The transition from the current monoliths will take some time unless the companies that control them move in that direction. Even well-known microkernels such as HURD, Mach, and the L4 family are not widely used. Hosting popular operating systems on top of a microkernel sometimes requires one-time modifications, which prevents rapid or convenient deployment [13]. Ideas that are never adopted in the consumer market will not improve quality for most users. However, companies such as Apple have taken the lead. Mac OS X Server and Mac OS X are built on Darwin, which is founded on the Mach 3.0 microkernel [5]. This provides "features critical to server operations, such as fine-grained multi-threading, symmetric multiprocessing (SMP), protected memory, a unified buffer cache (UBC), 64-bit kernel services and system notifications" [5]. Servers such as these can support Mac, Linux, and Windows clients. The more popular software integrates microkernel technology, the easier acceptance will be. It seems far more likely that the slow rewriting of mainstream operating systems, rather than the independent use of published microkernels, will eventually lead to widespread use.

Exokernels

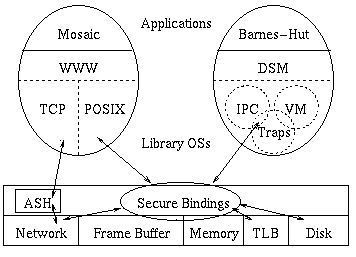

Exokernel, developed at MIT in 1994, takes the microkernel trend a step further [4]. It attempts to implement nothing in kernel space [2]; its purpose is the safe multiplexing of hardware resources [2]. Hardware events, timers, virtual memory, page faults, and other interrupts go to stubs that pass them to user-level processes for handling, so the implementation lives in user space rather than in the kernel. This allows great flexibility, on the premise that application programs know best what their resource demands are for [4]. For example, different scheduling policies can be used simultaneously, each selected according to which is preferable [2]. High-level functions are performed by libraries that are supplied by the user and not trusted by the kernel [4]. To protect the management of hardware resources, Exokernel uses secure bindings to control mappings into the TLB and to monitor things such as library OS cooperation. The kernel is responsible for reclaiming resources that a library may be holding onto or misusing. Features such as the Application-specific Safe Handler (ASH) allow particular resources to be used effectively. However, these handlers are not trusted, so they must be secured by techniques such as sandboxing or type-safe languages [4].

Figure 6: Exokernel Architecture [4]
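Secure bindings separate authorization from use: the kernel checks ownership once, when a binding is created, so later accesses can take a fast path. The toy model below illustrates only that idea together with resource revocation; the `Exokernel` class, its method names, and the page numbers are hypothetical, and the real Exokernel mechanisms are considerably more involved.

```python
class Exokernel:
    """Toy model of secure bindings and revocation (hypothetical API)."""

    def __init__(self) -> None:
        self.owner = {}     # physical page -> owning library OS
        self.bindings = {}  # (library OS, virtual page) -> physical page

    def allocate(self, libos: str, ppage: int) -> None:
        if ppage in self.owner:
            raise PermissionError(f"page {ppage} is already owned")
        self.owner[ppage] = libos

    def bind(self, libos: str, vpage: int, ppage: int) -> None:
        # Authorization is checked once, when the binding is created.
        if self.owner.get(ppage) != libos:
            raise PermissionError(f"{libos} does not own page {ppage}")
        self.bindings[(libos, vpage)] = ppage

    def tlb_load(self, libos: str, vpage: int) -> int:
        # Fast path: reuse the validated binding; no ownership check here.
        return self.bindings[(libos, vpage)]

    def revoke(self, ppage: int) -> None:
        # Reclaim a page from its library OS and drop every binding to it.
        self.owner.pop(ppage, None)
        self.bindings = {k: v for k, v in self.bindings.items() if v != ppage}


exo = Exokernel()
exo.allocate("libos_a", 7)
exo.bind("libos_a", 0x10, 7)
print(exo.tlb_load("libos_a", 0x10))      # 7
exo.revoke(7)
print(("libos_a", 0x10) in exo.bindings)  # False
```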

While Exokernel takes the microkernel approach to an even greater extreme, it also suffers from the same issues more acutely. Context switching becomes very costly when it is required even for events such as network ACKs [4]. This overhead could be dramatic, so work is being done to offset its impact. Concern has also been expressed that many papers "do not discuss several key aspects of OSs, such as file systems" [4], so in production settings exokernel systems may not provide all of the abilities or services required. Exokernels will face the same acceptance problems as microkernels, perhaps to an even greater degree, since they are more radically different from traditional operating systems.

Conclusions

Microkernels have the potential to provide huge gains in the area of operating systems. They can increase security, reliability, scalability, and portability, qualities that are critical in today's computing environment. Kernel research should continue to address performance so that microkernel advantages can be used within the efficiency requirements of most tasks. The issue of acceptance can be overcome as microkernels gain influence and public awareness of them grows. More likely, however, is a transition from the current monoliths to more microkernel-like designs, supported by the dominant companies. The future of operating systems will benefit greatly from the work put into microkernels, and the success of future systems depends on integrating their ideas.

[1] "About L4." ERTOS. 20 June 2006. National ICT Australia. 26 July 2006. <http://www.ertos.nicta.com.au/research/l4/about.pml>

[2] Browne, Christopher. "Microkernel-based OS Efforts." Christopher Browne's Web Pages: Research and Experimental Operating Systems. 25 July 2006. Cbbrowne.com. 25 July 2006. <http://cbbrowne.com/info/microkernel.html>

[3] Browne, Christopher. "GNU Hurd." Christopher Browne's Web Pages: Research and Experimental Operating Systems. Cbbrowne.com. 25 July 2006. <http://linuxfinances.info/info/hurd.html>

[4] Erlingsson, Ulfar. "Microkernels." Interface Design. 2 December 1996. Cornell University. 26 July 2006. <http://www.cs.cornell.edu/home/ulfar/ukernel/ukernel.html>

[5] "Features." Mac OS X Server. Apple Computer, Inc. 4 August 2006. <http://www.apple.com/uk/server/macosx/features/>

[6] "INRIA at the Heart of Grid Computing Research." INRIA. 18 November 2002. INRIA. 3 August 2006. <http://www.inria.fr/presse/themes/gridcomputing/grid1.en.html>

[7] "Kernel (computer science)." Wikipedia. 1 August 2006. Wikipedia.org. 4 August 2006. <http://en.wikipedia.org/wiki/Kernel_%28computer_science%29>

[8] Koren, Oded. "A Study of the Linux Kernel Evolution." ACM SIGOPS Operating Systems Review (2006): Volume 40, Issue 2, pp. 110-112. 11 July 2006.

[9] "L4 Microkernel Family." AllExperts. About.com. 25 July 2006. <http://experts.about.com/e/l/l/L4_microkernel_family.htm>

[10] "Microkernel." Wikipedia. 23 July 2006. Wikipedia.org. 25 July 2006. <http://en.wikipedia.org/wiki/Microkernel>

[11] Minnich, Ronald G. "Right-Weight Kernels: An Off-the-Shelf Alternative to Custom Light-Weight Kernels." ACM SIGOPS Operating Systems Review (2006): Volume 40, Issue 2, pp. 22-28. 11 July 2006.

[12] "Monolithic kernel." Wikipedia. 21 July 2006. Wikipedia.org. 25 July 2006. <http://en.wikipedia.org/wiki/Monolithic_kernel>

[13] Tanenbaum, Andrew S. "Can We Make Operating Systems Reliable and Secure?" Computer (2006): Volume 39, Issue 5. 4 July 2006.

[14] Uhlig, Volkmar. "Scalability of Microkernel-Based Systems." L4Ka Publications. 15 June 2005. University of Karlsruhe. 25 July 2006. <http://l4ka.org/publications/paper.php?docid=1866>

[15] Veiga, John. "The Pros and Cons of Unix and Windows Security Policies." IT Professional (2000): Volume 2, Issue 5. 4 July 2006.